A first-of-its-kind map of renewable energy projects and tree coverage around the world launched today, and it uses generative AI to essentially sharpen images taken from space. It’s all part of a new tool called Satlas from the Allen Institute for AI, founded by Microsoft co-founder Paul Allen.

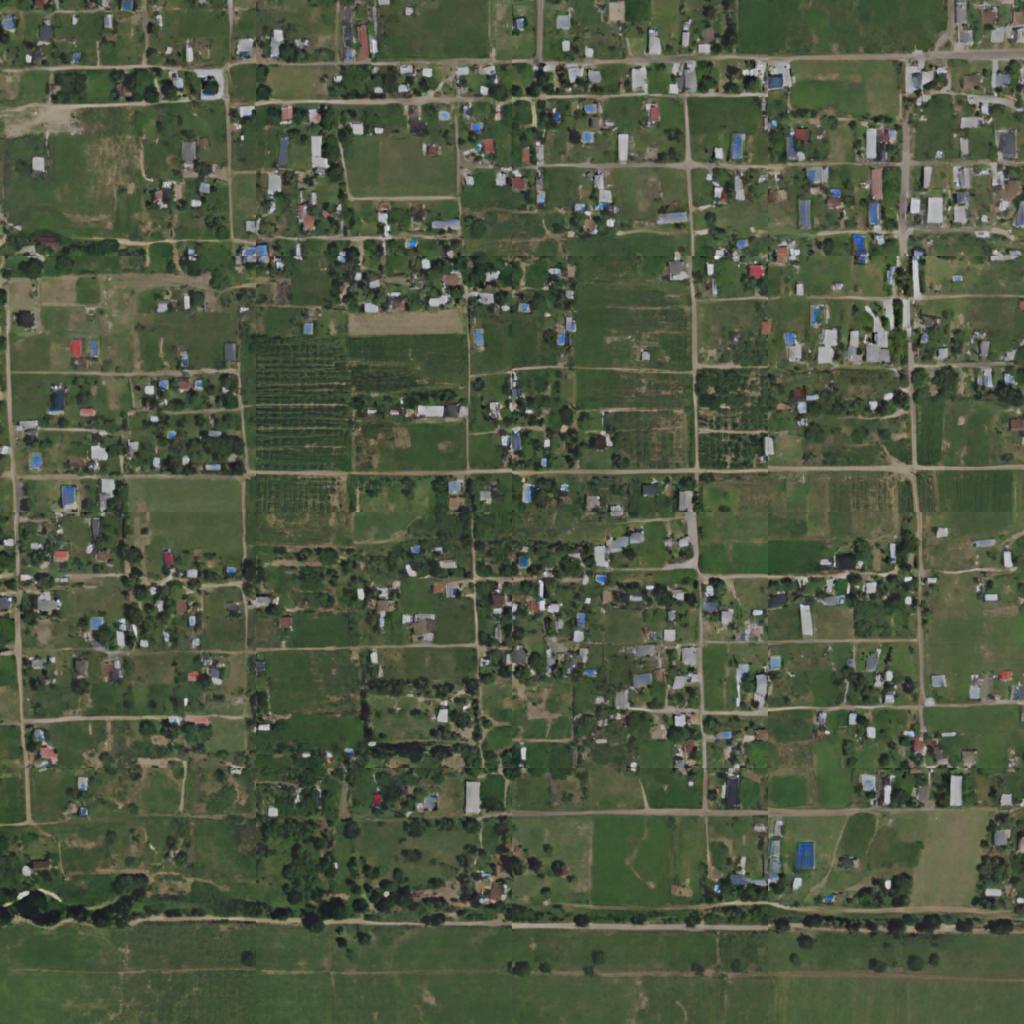

The tool, shared first with The Verge, uses satellite imagery from the European Space Agency’s Sentinel-2 satellites. But those images give a fairly blurry view of the ground. The fix? A feature called “Super-Resolution.” It uses deep learning models to fill in details, like what buildings might look like, and generate high-resolution images.

On the left, an AI-generated high-resolution image of Nakuru, Kenya. On the right, a low-resolution image of the same location taken by Sentinel-2. Images courtesy of the Allen Institute for AI

For now, Satlas focuses on renewable energy projects and tree cover around the world. The data is updated monthly and covers the parts of the planet monitored by Sentinel-2, which is most of the world except parts of Antarctica and open oceans far from land.

It shows solar farms and onshore and offshore wind turbines. You can also use it to see how tree canopy coverage has changed over time. Those are important insights for policymakers trying to meet climate and other environmental goals. But there’s never been a tool this expansive that’s free to the public, according to the Allen Institute.

This is also likely one of the first demonstrations of super-resolution in a global map, its developers say. To be sure, there are still a few kinks to work out. Like other generative AI models, Satlas is still prone to “hallucination.”

“You can either call it hallucination or poor accuracy, but it was drawing buildings in funny ways,” says Ani Kembhavi, senior director of computer vision at the Allen Institute. “Maybe the building is rectangular and the model might think it is trapezoidal or something.”

That might be due to differences in architecture from region to region that the model isn’t great at predicting. Another common hallucination is placing cars and vessels in places the model thinks they should be based on the images used to train it.

To develop Satlas, the team at the Allen Institute had to manually pore over satellite images to label 36,000 wind turbines, 7,000 offshore platforms, 4,000 solar farms, and 3,000 tree canopy coverage percentages. That’s how they trained the deep learning models to recognize those features on their own. For super-resolution, they fed the models many low-resolution images of the same place taken at different times. The model uses those images to predict sub-pixel details in the high-resolution images it generates.

The Allen Institute plans to expand Satlas to provide other kinds of maps, including one that can identify what kinds of crops are planted across the world.

“Our goal was to sort of create a foundation model for monitoring our planet,” Kembhavi says. “And then once we build this foundation model, fine-tune it for specific tasks and then make these AI predictions available to other scientists so that they can study the effects of climate change and other phenomena that are happening on the Earth.”